What keeps YOU awake at night? I’m very lucky. Not much keeps me awake at night. Thanks to regular exercise, a cold house, early rising and no kids, I’m blessed with eight hours a night. This may seem a pretty odd thing to say when running a small social enterprise in the midst of a pandemic and now a second lockdown. When I don’t sleep, however, it’s usually because of something I haven’t done that may put someone else at risk. And this usually relates to safeguarding and child protection.

Understanding the real risks

It’s the bit that separates us all from a normal business. We do all the business stuff too, but we work with people as our mission determines. Of paramount importance is our responsibility for the safety and wellbeing of people who are more likely to come to harm than the general population. Why then would anyone choose to put more risk of harm in their way? And yet that is exactly what we’ve all done by moving everything online.

As we all use multiple platforms and encourage people to engage with us in this way, what risks are we encouraging people to take, and can we mitigate them when we don’t know what they all are ourselves? From WhatsApp to Zoom, the security settings, the GDPR implications and our own use of public wifi all pose digital challenges and risks way beyond our competence.

As the Online Harms Bill makes its way through Parliament, you don’t have to have children to be alarmed by some of the issues it’s trying to curb. 99% of 12 to 15-year-olds are now online to some extent. Parents have significant concerns about what their children are accessing online, and not without good reason. From the spread of terrorism to scams, online porn, shopping and fake news, the Internet is a place of huge unknown risk. The Internet is also a place of community, support, resources, cost-savings, fun and diversion. Most importantly, denying it isn’t an option. So what is our responsibility as a sector, as employees or employers, as parents, members of the community and custodians of children’s safety, and how do we ensure our little bit of the world is safe?

I admit to some frustration when public sector colleagues adopt clunky systems when more attractive, user-friendly online newsletter applications exist, or when social workers don’t use WhatsApp to engage with young people. As we rushed to embrace these technologies at Your Own Place during the good times, following brief analysis of their functionality, I paused briefly to wonder whether a more risk-averse approach might in fact be the right one. Led by wanting to overcome the barriers to building great relationships and being efficient, we forged ahead with using tech whilst knowing little about it and what sits behind it. As I seriously make plans to bring digital capacity into the team, I realise that I really don’t know enough even to write the job description. How is it that at the other end of the spectrum we have driverless cars?

As outlined in Blog 2, at Your Own Place we have embraced the Cloud, multiple digital platforms for the back office as well as ways to communicate with the people we support, our own employees, volunteers and partners. Not to do so would feel perverse, stubborn and even immoral in a world ‘digital by default’. But if our organisational desire to embrace tech doesn’t align with our capability and capacity to keep people safe, does this mean the sector shouldn’t and can’t forge ahead?

Recognising knowledge gaps

Having attended a fascinating seminar by Liam Cahill in 2019 on the use of Artificial Intelligence (AI) in the sector, I suddenly felt like the Luddite that many people expressed themselves to be following my last blog. Whether it is used for advice chatbots, analysing data-sets or making donations, it would be naive to think that, because of the human interaction involved in our work, AI poses no threat to us as well as opportunity.

The ethical arguments about AI have to be had, as well as those about the implications of AI for humans doing human-facing work and for our role as job creators. Our sector has scarcely begun to make use of technology and I honestly don’t think we know enough to simply dismiss AI as unethical. Rather, we are not using it well because we don’t have the expertise or skillset to make strategic decisions about whether to invest in it or not. From workers in the field to management, leadership and governance, I have witnessed anything from outright refusal to adopt technology to alarmingly low levels of understanding. Catch22, in their Online Harms Consultation, cite 38% of practitioners feeling insufficiently trained to deal with online harm. This lack of expertise across the piece has huge ramifications for keeping people safe.

Some of those ideas outlined above are the big tech issues and not the ones that keep me awake. What specifically keeps me awake is not AI, but the increase in Child Sexual Exploitation (CSE) online and the financial scamming of people with already limited funds. The online abuse and bullying that so many young people experience on social media, as well as their deteriorating mental health, should be of huge concern to us all – especially now. Balancing mission, risk and accessibility, choosing which platforms we should use and what this means for the team and online safety, are daily concerns. Maybe AI should be keeping me awake as one of the biggest threats and opportunities to our sector, and the fact that it isn’t is indicative of my lack of understanding.

Being brutally honest, the sector just isn’t getting the support it needs with this. As a result we’re doing it ourselves – and doing it badly, often unsafely or just not at all – which is unsafe too. Into the sieve at one end, from the local authority programmes, go Child Protection training, County Lines awareness raising and GDPR workshops, whilst no attention is paid to the risks our online systems and tools pose or the practical support that we need. From social media and its private messaging, to using our own computers on public wifi and, during lockdown, providing devices – if we were truly aware of all the risks we would probably end up doing nothing at all. As we enter another lockdown, it’s just possible that this would be worse than the risks themselves. That seems like a bit of a Hobson’s Choice to me.

Building on the foundations

So one option for many is to choose not to deliver interventions until face to face resumes. As we headed into the first lockdown, this was a real possibility. It is seemingly impossible, with our capacity as a third sector organisation, to overcome digital exclusion in all its complexity AND keep people safe. We are not an essential service and therefore pausing, whilst likely putting us out of business, would have simply been more collateral damage to the sector. Of course, as founder, I didn’t see this as an option. At a time of great uncertainty we had a role to fulfil. Even during a pandemic, people are still moving to independence, needing new skills and needing our support. Arguably, our people needed us more than ever. The good news then is that on some technological solutions, we weren’t starting from a baseline of using no tech at all and not having a clue. And this is the case across the sector – we all have something to build on.

Our delivery and 1-2-1 conversations have for some time been ubiquitous via WhatsApp and whilst Zoom was new to the people we support, the concept of video calls was not. We invited people to engage more with us via social media, YouTube, via our newsletters, website, calls, emails and other messaging.

Our values always sit behind our approaches. For online delivery, and as we move towards group delivery, our aim is to retain the fun and interactivity as routes to engagement and trust. From trust you can have safety conversations too, and that takes time. But we all had to move fast. We know that in the real world, where Mutual Aid groups flourished during the first lockdown, some risks were probably taken. In a crisis, you take a few risks.

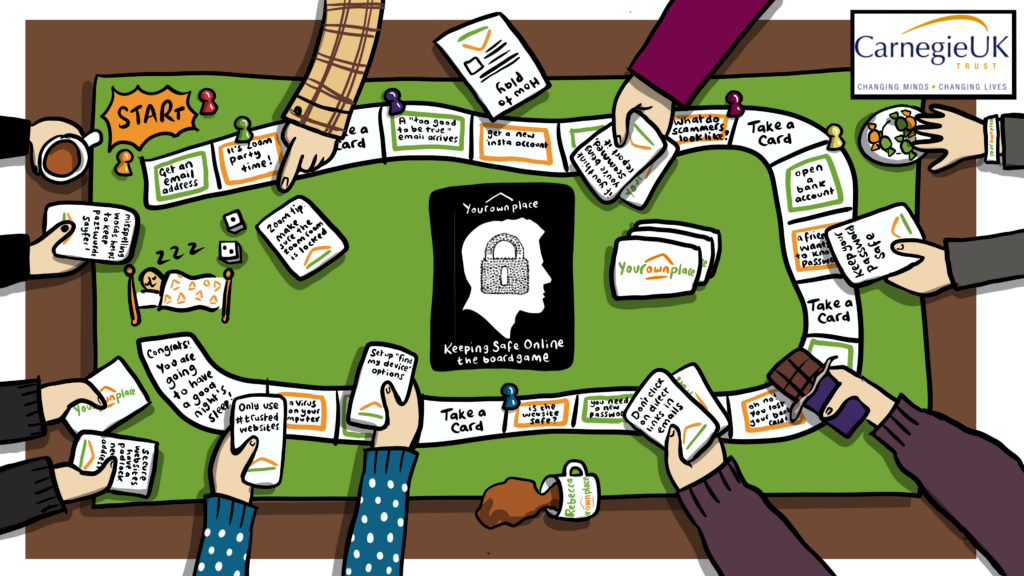

In our group online delivery, in addition to our Zoom ice-breakers, introductory sessions, polls and interactive screen drawing, we developed Zoom guides as well as visual guides on how to create safe email addresses and passwords. These were developed for a nearby dementia music charity who, like many others, discovered the irony that most of the help with getting online is online! Those questions about people’s digital skills, knowledge, connectivity, resilience and confidence have to become the norm if we are to build a picture of the digital exclusion barriers alongside the risks and then the solutions.

Setting boundaries

#GetOnlineWeek in October provided a helpful reminder of both our role in supporting people to stay safe online and all the small things we can all do as an organisation too. The development of us and the support we provide go hand in hand.

One example of a potential risk is Instagram. With Instagram being the social media platform most aimed at those we support, we’re aware that we will likely receive contact from people via private messaging who may be in distress. Just last week a young man messaged via Facebook at 11.30pm on a Friday. There was no suggestion of a crisis, but he wouldn’t have messaged if he hadn’t needed help either.

For this reason, real-world congruence and boundaries are everything. From day one, with a team encouraged to be active on social media, our workplace boundaries have been in place. Whether digital or not, our people know that we are not a crisis service and that we don’t run an out-of-hours provision. This conversation forms part of staff induction and relationship building, but equally has to evolve as we grow, as platforms get used differently or are taken over by other members of the team, and as new challenges occur. We responded to the man in question after 9am on Monday. It wasn’t an emergency, but it was something we could help with. Contact in this way isn’t going away, so it’s simply no good putting our sector head in the sand or throwing up our arms with the excuse ‘I don’t like Facebook’ or ‘I don’t use it in my private life, so I don’t understand it’. The people we support do.

With a new safeguarding lead in our Operational Manager, Digital Safeguarding forms part of that role. We have a huge amount to learn and, like many, are learning as we go. With an open and blame-free culture, we can ask whether phone numbers are visible in a WhatsApp group message or challenge weekend Twitter posting. Trusting people’s common sense is important, but it has to be balanced with not assuming that, just because people DO use Facebook in their private lives, they understand its settings and risks.

Forging ahead

We have so far to go on this part of our digital journey and honestly have just begun. It will continue to keep me awake at night because it is moving at such pace and I can’t possibly keep up. It seems this answers the question about how we move forward – by bringing this expertise into the team to keep people safe because we all have a responsibility. Ultimately, we have to care about our people and to want to keep them safe rather than being paralysed by what we don’t know and using it as an excuse.

I hope you’ll join me for blog four when I’ll be exploring whether we can still be said to be achieving our mission if it’s all digital.

You can watch the vlog version of this blog below.